Classification and Subject Access, Information Organization

Today

- Classification: What is it?

- Information Extraction: What is it?

- How to apply them: Easy / Better / Better-er / Best

- Coda: Data Analysis

Skill Check

From last week:

- How can AI tools help overcome the “vocabulary problem” in information seeking?

- What is RAG (Retrieval Augmented Generation) and how does it work?

- What are some strengths and limitations of using LLMs for information retrieval?

- List the different parts of the traditional search systems where AI can aid in information seeking.

- What are embedding models and how do they relate to traditional information retrieval approaches?

Key Concepts

Structured vs Unstructured Data

- Structured data: data that is organized in a predefined format, such as numbers, dates, and categorical variables.

- Unstructured data: data that is not organized in a predefined format, such as text, images, audio, and video.

Supervised vs Unsupervised Learning

Supervised Learning: Model learns from labeled training data

- Examples: Classification, regression

- Requires human-annotated data

- More accurate for specific tasks

- E.g., spam detection, sentiment analysis

Unsupervised Learning: Model finds patterns in unlabeled data

- Examples: Clustering, dimensionality reduction

- No human annotation needed

- Good for discovering hidden patterns

- E.g., customer segmentation, topic modeling
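To make the contrast concrete, here is a minimal sketch using only the Python standard library. The word-count classifier and the overlap-based grouping are toy stand-ins chosen for illustration, not the methods used later in the course, and the example texts, labels, and the `threshold` value are made up.

```python
from collections import Counter, defaultdict


def tokenize(text):
    return text.lower().split()


# Supervised: learn word counts per label from human-labeled examples.
def train(labeled):
    counts = defaultdict(Counter)
    for text, label in labeled:
        counts[label].update(tokenize(text))
    return counts


def predict(counts, text):
    words = tokenize(text)
    # Pick the label whose training examples contained these words most often.
    return max(counts, key=lambda label: sum(counts[label][w] for w in words))


# Unsupervised: group unlabeled texts by shared vocabulary; no labels are given.
def cluster(texts, threshold=0.2):
    groups = []  # each group is a list of (text, token set) pairs
    for text in texts:
        tokens = set(tokenize(text))
        for group in groups:
            seed = group[0][1]
            union = tokens | seed
            overlap = len(tokens & seed) / len(union) if union else 0.0
            if overlap >= threshold:
                group.append((text, tokens))
                break
        else:
            groups.append([(text, tokens)])
    return [[t for t, _ in group] for group in groups]


labeled = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting notes for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train(labeled)
print(predict(model, "claim your free prize"))  # spam
print(cluster(["free prize now", "win a free prize", "monday team meeting"]))
```

The supervised half cannot run without the labels; the unsupervised half never sees any, and it is up to you to decide what each group means.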

Classification

- Classification is the process of categorizing data into predefined categories or classes.

- It is traditionally a type of supervised learning, where the model is trained on labeled data.

As we'll see, few-shot prompting of large pretrained models is changing how we approach it!

Information Extraction

- The process of extracting structured data from unstructured data

- Classification describes the data (metadata); Information Extraction pulls data out in a standardized format (content)

Some Use Cases

Classification

- Identifying spam/phishing emails

- Sentiment analysis; e.g. customer feedback, book reviews

- Customer feedback analysis – e.g. extracting themes from survey responses

- Categorizing reference questions for service improvement

Some Use Cases

Information Extraction

- Meeting note action item extraction

- Classifying library materials by subject headings or call numbers

- Content analysis of research papers – e.g. extracting methodology types, research approaches, key findings, citations

- Identifying themes in qualitative research data

The falling burden of training data

- Historically, training data was a limiting factor: you needed many well-labeled examples

- Now, with generative AI, we can use fewer examples (few-shot) or even none (zero-shot)
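The difference between zero-shot and few-shot is simply whether labeled examples are included in the prompt. A minimal sketch, assuming a plain `Text:` / `Label:` layout (one of many possible layouts, chosen here only for illustration) and a hypothetical `build_prompt` helper:

```python
def build_prompt(task, examples, item):
    """Assemble a classification prompt.

    With an empty `examples` list this is zero-shot; with a handful of
    (text, label) pairs it becomes few-shot.
    """
    lines = [task, ""]
    for text, label in examples:
        lines += [f"Text: {text}", f"Label: {label}", ""]
    # The item to classify goes last, with the label left open for the model.
    lines += [f"Text: {item}", "Label:"]
    return "\n".join(lines)


task = "Classify each text as positive, neutral, or negative."
examples = [
    ("Love this jacket, fits perfectly!", "positive"),
    ("My order arrived two weeks late.", "negative"),
]

zero_shot = build_prompt(task, [], "The colors are okay, I guess.")
few_shot = build_prompt(task, examples, "The colors are okay, I guess.")
print(few_shot)
```

The same function covers both cases, which makes it easy to compare results as you add examples.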

Ad-hoc classification and few-shot expansion

With LLMs, we can do ‘on-the-fly’ classification, where we just describe the categories we want.

- e.g. “Here are books I liked …”

- -> “Suggest more”

- -> “Tag them by genre and rate them 1-5 on pulpiness, realism, etc.”

- -> “Write a taste profile for me”

* Ad-hoc: Created or done for a particular purpose as needed, rather than following a pre-existing system

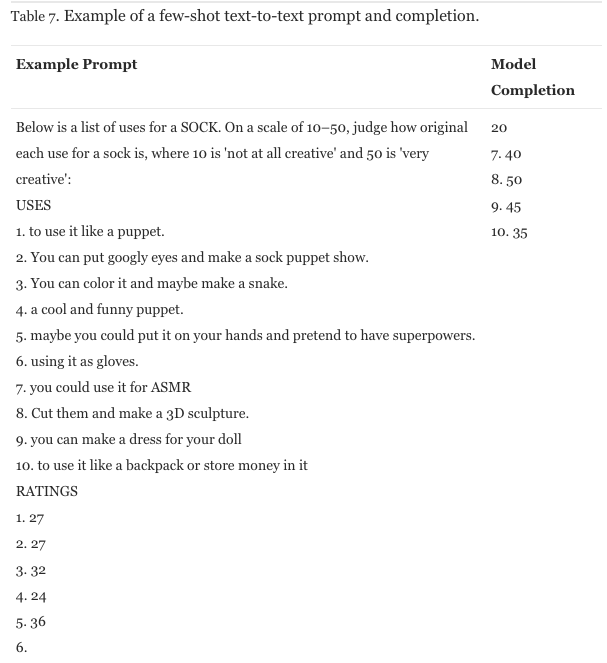

Example from my research: classifying creativity tests

Results

Baseline

- Ranges from 0.12 (LSA, Semdis) - 0.26 (OCS)

LLM Prompted (c.2022)

Zero-Shot

- GPT-3 Davinci: .13

- GPT-3.5: .19

5-Shot

- GPT-3 Davinci: .42

- GPT-3.5: .43

Results (w/GPT-4 & Fine-Tuning)

Fine-tuning a model is better, but prompted models are rapidly improving.

Baseline

- Ranges from 0.12 (LSA, Semdis) - 0.26 (OCS)

LLM Prompted (adding GPT-4)

Zero-Shot

- GPT-4: .53 (vs. .19 for GPT-3.5)

5-Shot

- GPT-4: .66 (vs. .43 for GPT-3.5)

20-Shot

- GPT-4: .70

LLM Fine-Tuned

- T5 Large: .76

- GPT-3 Davinci: .81

How to do classification and information extraction

Easiest Way: Just Ask!

A clear prompt is all you need for many ‘good-enough’ results.

Questions to consider:

- What is the goal?

- How do you want the output to be structured?

- Classification: What are the labels? Is it categorical (discrete), ordinal (ranked), or continuous (numeric)?

- Data Extraction: What schema do you want to use? What properties do you want to extract?

Activity: Basic Sentiment Classification

Example Data

Tasks

- Classify the sentiment of tweets about a clothing company

- Extract topic categories for the tweets

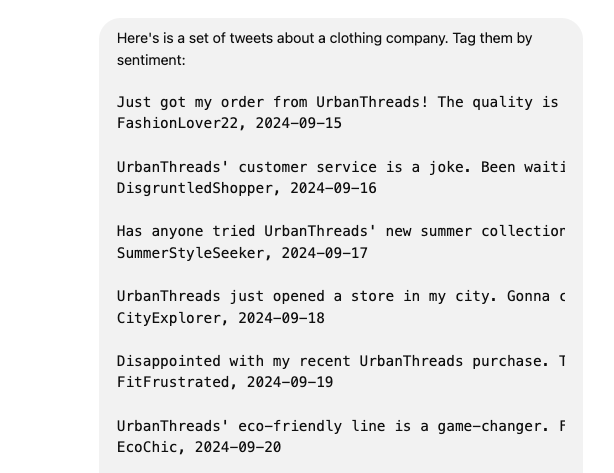

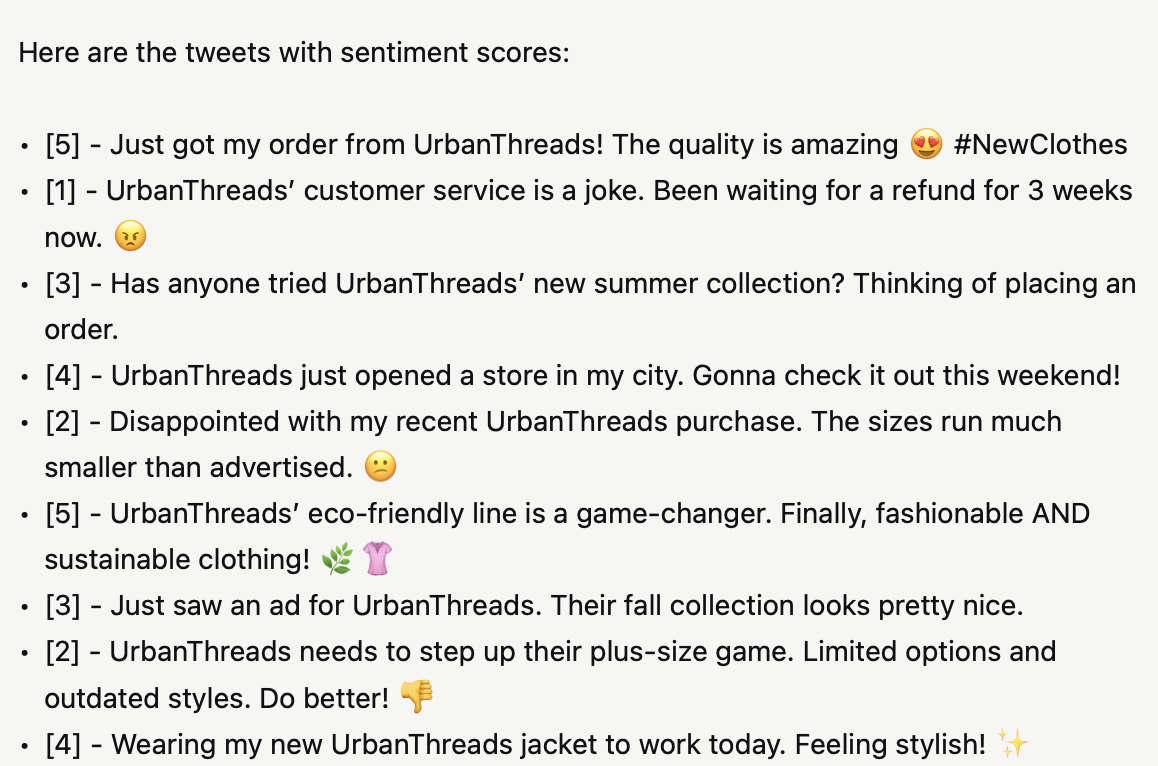

Example: Tweets

A basic classification prompt:

Prompt:

Here is a set of tweets about a clothing company. Tag them by sentiment:

{tweets}

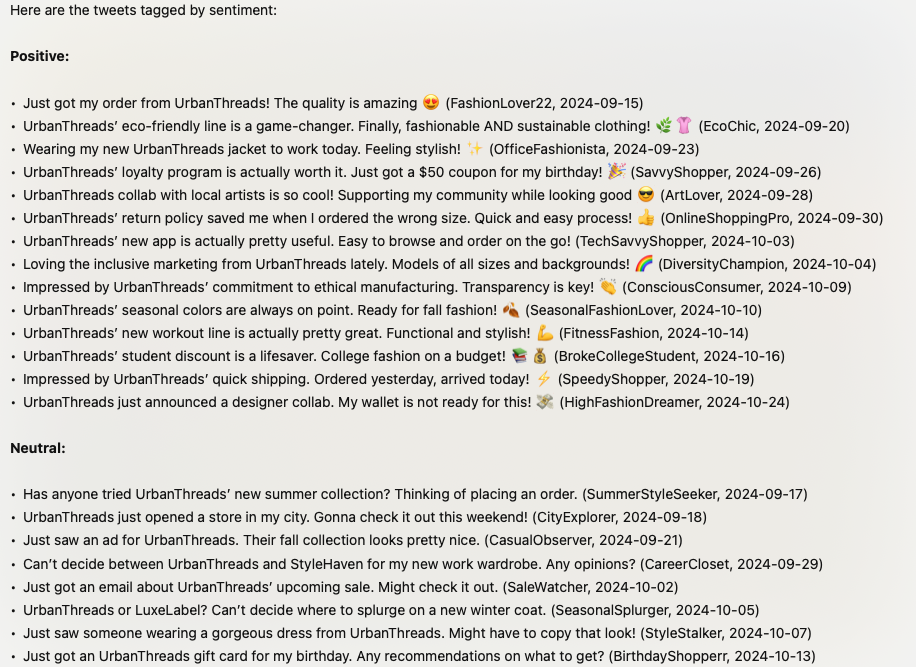

Improve the prompt with more guidance:

Here is a set of tweets about a clothing company.

Tag each one by sentiment on a scale of 1-5. 1 is negative, 3 is neutral, 5 is positive.

{tweets}

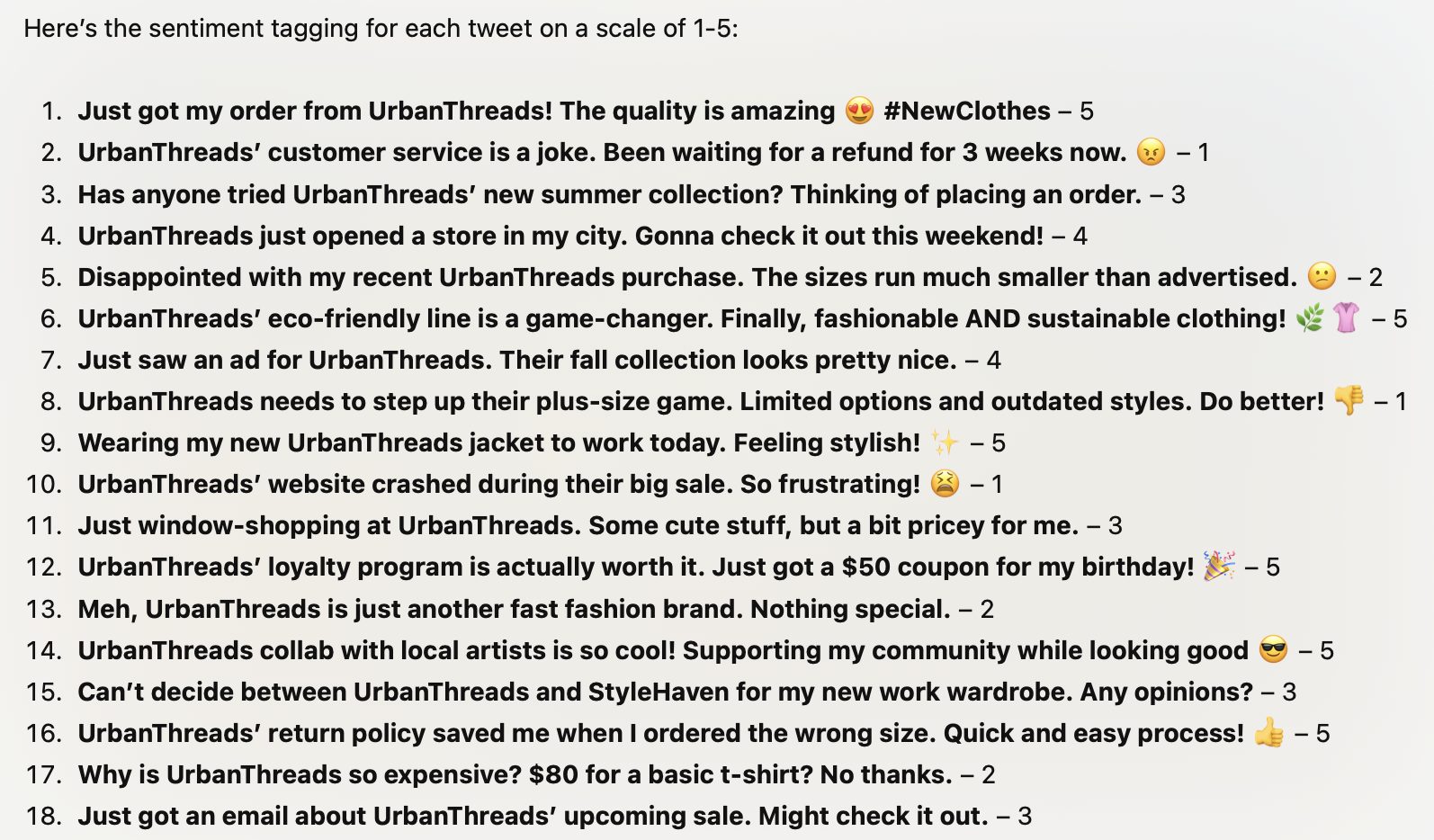

Add even more guidance: labeling guidelines, few-shot examples, and an output format:

Here is a set of tweets about a clothing company. Tag each one by sentiment on a scale of 1-5. 1 is negative, 3 is neutral, 5 is positive.

# Coding Guidelines

## 1. Very Negative (1)

- Strong negative emotions (e.g., anger, frustration, sadness)

- Harsh criticism or complaints about UrbanThreads

- Use of negative words (e.g., "hate," "terrible," "awful")

- Negative experiences with UrbanThreads products or services

Example: "I absolutely hate UrbanThreads! Their clothes fell apart after one wash. Worst quality ever, never shopping there again! 😡 #UrbanThreadsFail"

... {and so on}

# Format

- [{score}] - {tweet}

# Tweets to Annotate

{tweets}
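Specifying an output format pays off when you want to use the results afterwards. The sketch below fills the `{tweets}` slot of a shortened version of the prompt and parses replies written in the `[{score}] - {tweet}` format. The helper names, the sample tweets, and the sample reply are hypothetical; no model is actually called.

```python
import re

# Shortened version of the prompt above; doubled braces are literal braces.
PROMPT_TEMPLATE = """Here is a set of tweets about a clothing company. Tag each one by sentiment on a scale of 1-5. 1 is negative, 3 is neutral, 5 is positive.

# Format

- [{{score}}] - {{tweet}}

# Tweets to Annotate

{tweets}"""

# Matches lines such as "- [4] - Nice fabric" (the leading bullet is optional).
LINE_PATTERN = re.compile(r"^\s*-?\s*\[(\d)\]\s*-\s*(.+)$")


def fill_prompt(tweets):
    return PROMPT_TEMPLATE.format(tweets="\n".join(f"- {t}" for t in tweets))


def parse_reply(reply):
    """Return (score, tweet) pairs, skipping lines that do not fit the format."""
    rows = []
    for line in reply.splitlines():
        match = LINE_PATTERN.match(line)
        if match and 1 <= int(match.group(1)) <= 5:
            rows.append((int(match.group(1)), match.group(2).strip()))
    return rows


prompt = fill_prompt(["I hate it", "Love the new jacket!"])

# A made-up reply, standing in for what a model might return.
sample_reply = "Sure, here you go:\n- [1] - I hate it\n- [5] - Love the new jacket!"
print(parse_reply(sample_reply))
```

Lines that do not match the format, such as the chatty first line of the reply, are dropped rather than guessed at.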

How to use it: Better

- Provide examples!! Just because zero-shot is good, don’t forget that few-shot is better

- Reasoning models and Chain-of-Thought prompting: Advanced AI models (o1/o3, R1, etc.) that take time to “think” before responding

- Temperature (use the playground!)

- Structured Data Outputs

Temperature and Stochasticity

Even at temperature = 0:

- Internal processes have some randomness

- Results may vary slightly between runs

- Newer models still show variation

Think of it as:

- Temperature = intentionally added randomness

- Base randomness = inherent to model’s processes

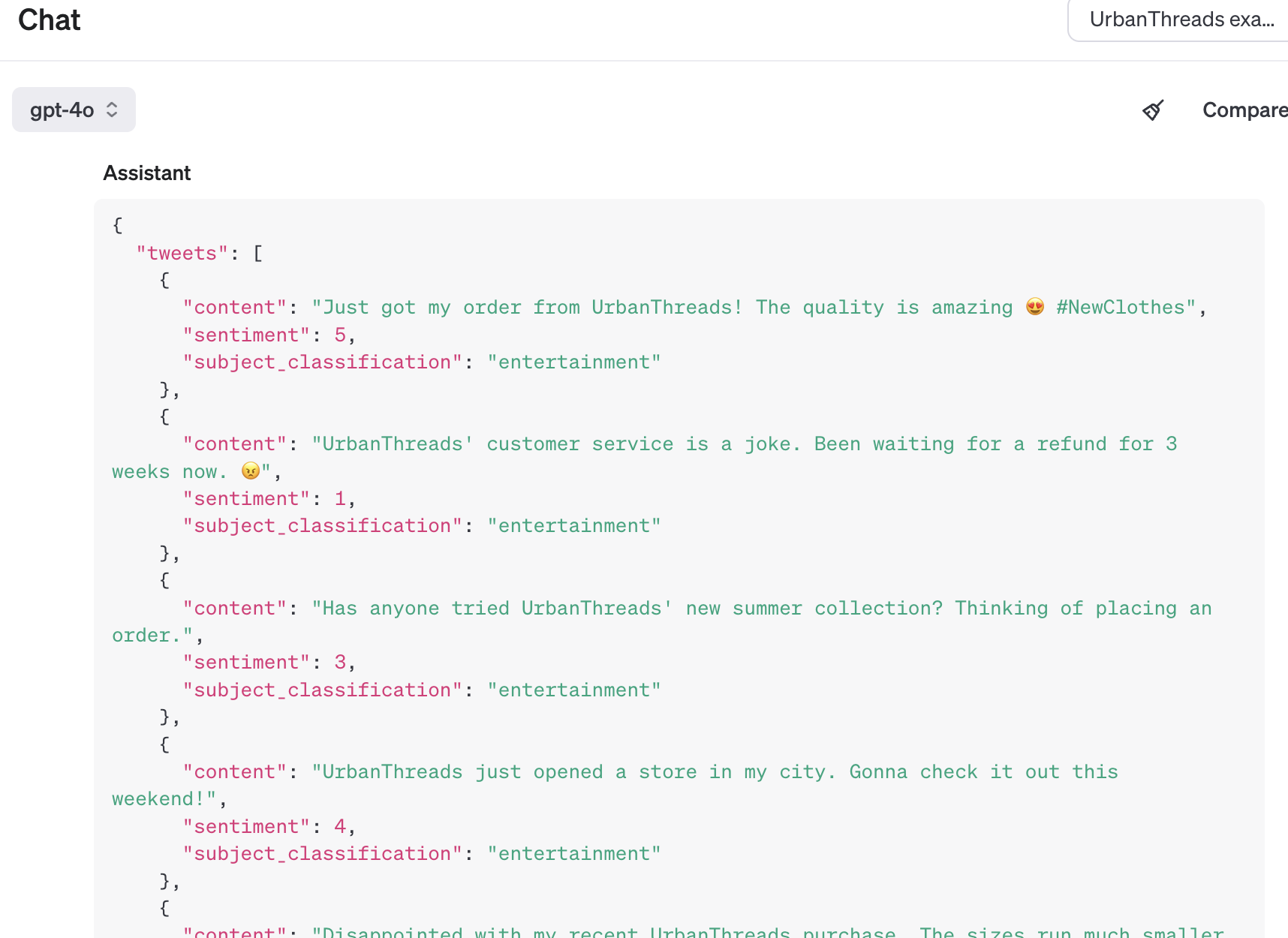
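One way to picture the "intentionally added randomness" part is temperature-scaled sampling over the model's scores for each candidate output. The sketch below is a simplified illustration with made-up scores for three labels. It models only the temperature knob, not the base randomness of hosted models described above.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature == 0:
        # Limiting case: all probability goes to the highest-scoring option.
        best = max(range(len(scores)), key=lambda i: scores[i])
        return [1.0 if i == best else 0.0 for i in range(len(scores))]
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(labels, scores, temperature, rng):
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(labels, weights=probs, k=1)[0]


labels = ["negative", "positive", "neutral"]
scores = [1.0, 2.0, 0.5]  # made-up scores for illustration
rng = random.Random(0)

for temperature in (0, 0.5, 2.0):
    probs = softmax_with_temperature(scores, temperature)
    print(temperature, [round(p, 2) for p in probs])
```

At temperature 0 this toy sampler always returns the top label; at higher temperatures the probabilities flatten and repeated runs disagree more often.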

Structured Data Outputs

Language models like structure. Be explicit.

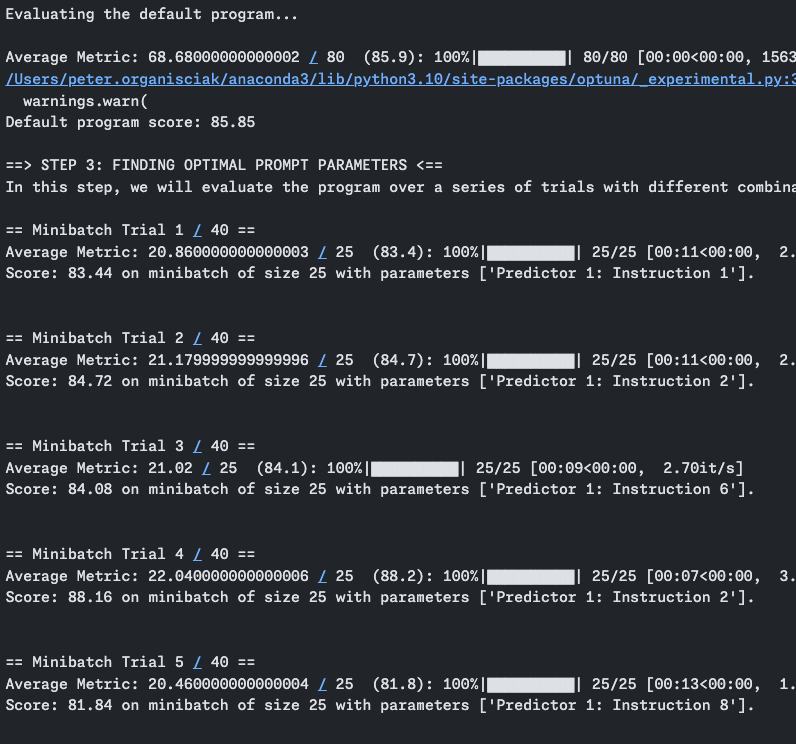
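Being explicit about structure also lets you check the reply mechanically. A minimal sketch, assuming you asked the model for a JSON list of objects with `tweet`, `sentiment`, and `topic` fields; the field names and the topic list are hypothetical choices for this example, and the reply is hand-written rather than produced by a model.

```python
import json

# Hypothetical label set for the topic field.
ALLOWED_TOPICS = {"quality", "price", "shipping", "service", "other"}


def validate_reply(reply_text):
    """Parse a JSON reply and reject records that break the schema."""
    records = json.loads(reply_text)
    if not isinstance(records, list):
        raise ValueError("expected a JSON list of records")
    clean = []
    for record in records:
        score = record["sentiment"]
        topic = record["topic"]
        # type(...) is int also rejects booleans, which are ints in Python.
        if type(score) is not int or not 1 <= score <= 5:
            raise ValueError(f"sentiment must be an integer 1-5, got {score!r}")
        if topic not in ALLOWED_TOPICS:
            raise ValueError(f"unknown topic: {topic!r}")
        clean.append({"tweet": str(record["tweet"]), "sentiment": score, "topic": topic})
    return clean


sample_reply = """[
  {"tweet": "Fell apart after one wash", "sentiment": 1, "topic": "quality"},
  {"tweet": "Arrived a day early!", "sentiment": 5, "topic": "shipping"}
]"""
print(validate_reply(sample_reply))
```

Failing loudly on a bad record is a design choice; for large batches you might prefer to collect the failures and re-prompt only those items.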

How to use it: Better-er

Optimize prompts with DSPy! https://github.com/stanfordnlp/dspy

How to use it: Best

Fine-tuning!

- Fine-tuning is the process of training a model on a specific task and dataset

- It is a type of supervised learning, where the model is trained on labeled data

- You’re starting from a pretrained language model, so the resource use is not prohibitive. E.g. gpt-4o-mini or gpt-4o

- The emergence of GenAI as an industry has made this much easier! You’re not coding neural networks, just uploading data of USER INPUT -> EXPECTED BOT RESPONSE

https://platform.openai.com/finetune

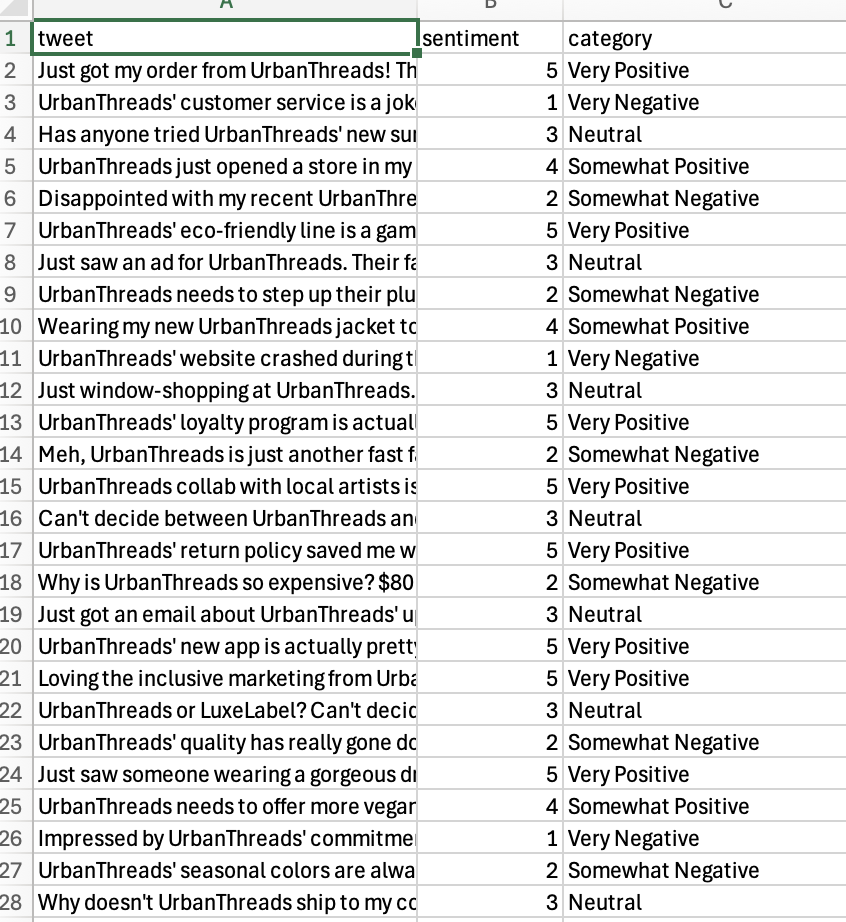
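The "USER INPUT -> EXPECTED BOT RESPONSE" data is typically uploaded as a JSONL file, one training example per line. The sketch below assumes the chat-style `messages` layout described in OpenAI's fine-tuning documentation; check the current documentation before uploading, since the expected format may change. The `to_jsonl` helper and the example pairs are hypothetical.

```python
import json


def to_jsonl(pairs, system_prompt):
    """Convert (user input, expected response) pairs to JSONL text."""
    lines = []
    for user_input, expected in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_input},
                {"role": "assistant", "content": expected},
            ]
        }
        # One JSON object per line; keep emoji and accents readable.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


pairs = [
    ("Worst quality ever, never shopping there again!", "1"),
    ("Love the new jacket!", "5"),
]
jsonl_text = to_jsonl(pairs, "Rate the tweet's sentiment from 1 to 5.")
print(jsonl_text)
```

In practice you would write `jsonl_text` to a file and upload that file; a real training set needs far more than two examples.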

Coda: How do you get the data out?

ChatGPT: Use Code Interpreter

- Request “Write the data to a CSV file with Python”

- Python code will be generated and executed

- Download the resulting CSV file

Claude: Write as artifact

- If you ask Claude to write the output as a Markdown artifact, there’s a button on the bottom right that lets you copy the data easily.

Manual Export

- Request “Write the data as a table”

- Copy the formatted table

- Paste directly into Excel/spreadsheet

Example output from ChatGPT:

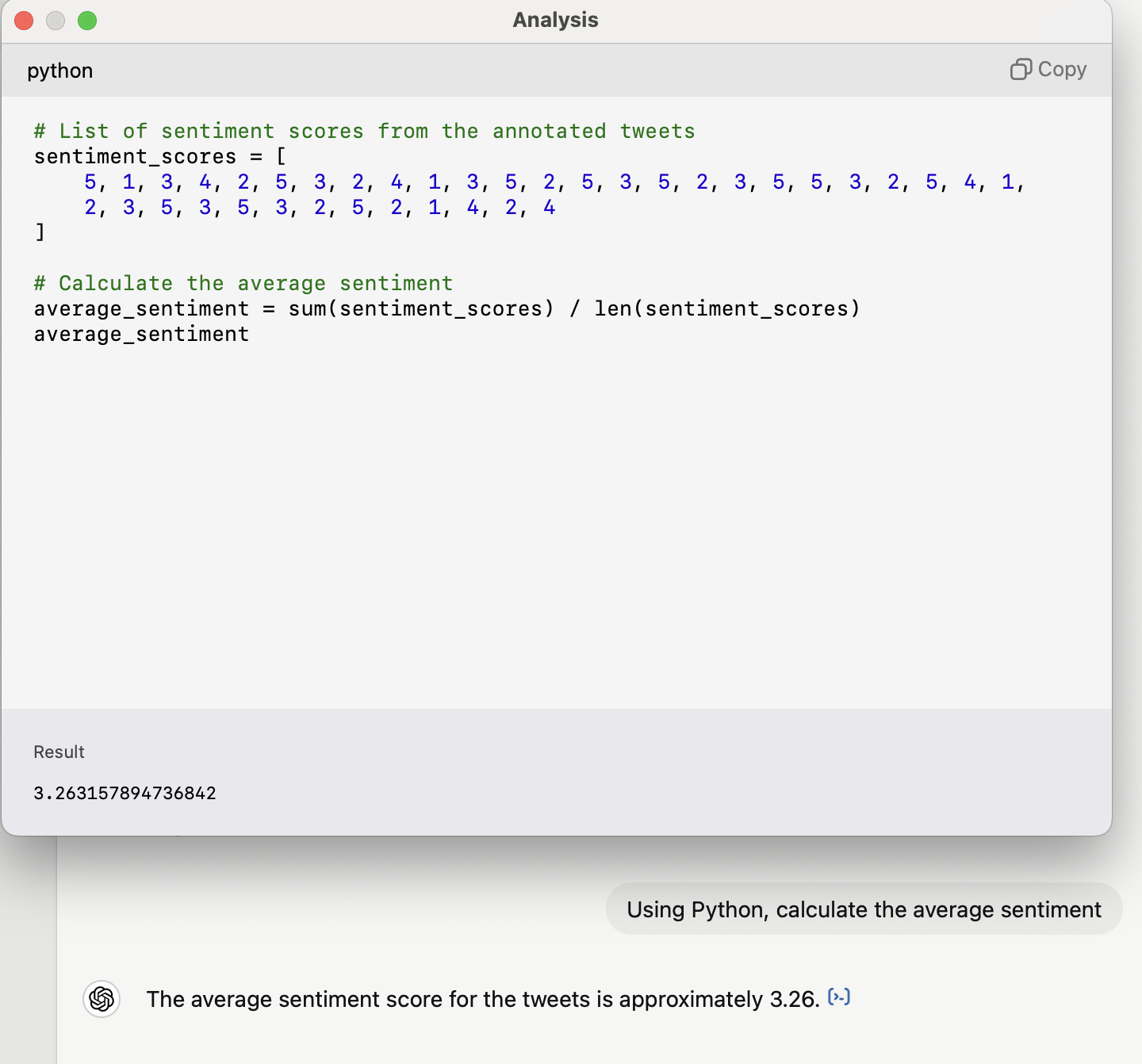

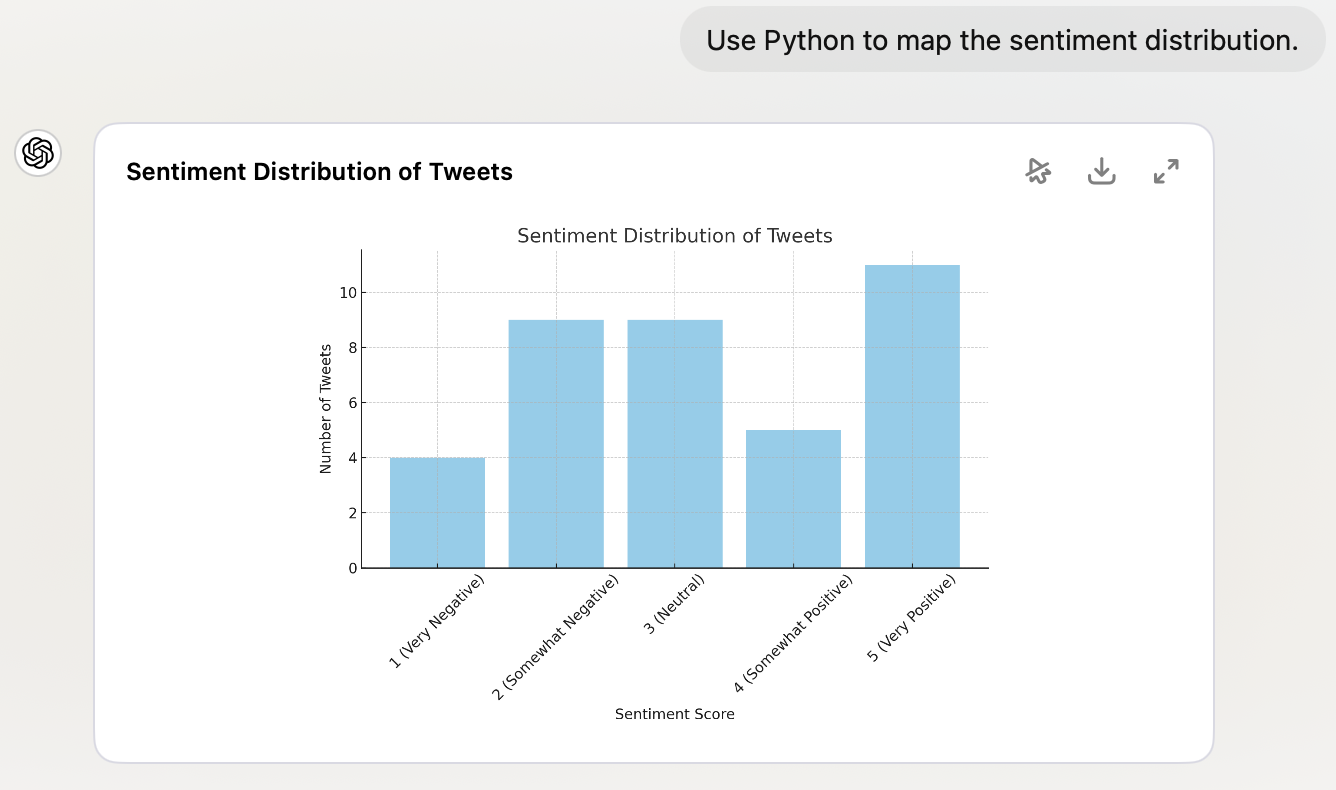

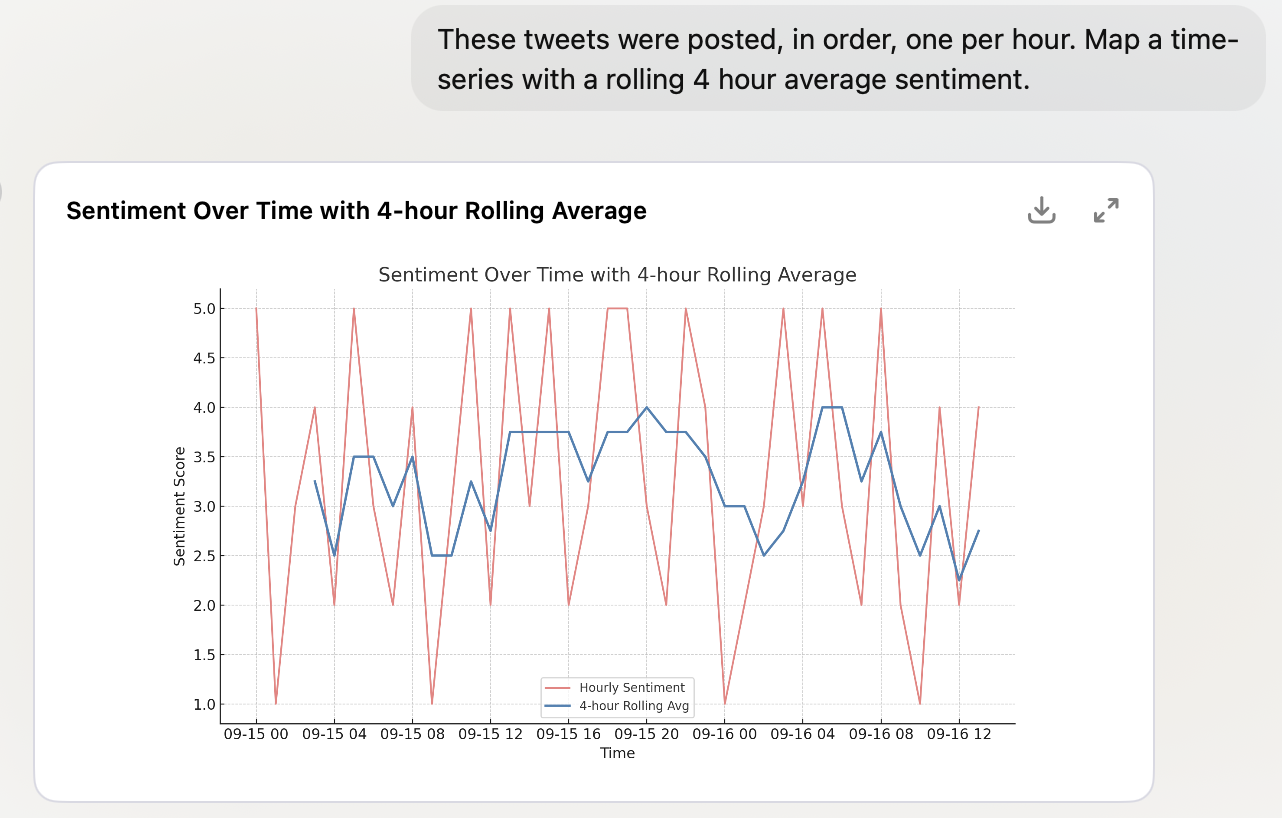
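For reference, the kind of Python that the "write the data to a CSV file" request tends to produce looks roughly like the sketch below. It uses the standard `csv` module; the column names and rows are made up, and the code a chatbot actually generates will differ.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text, quoting values when needed."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {"tweet": "Great fit, great price", "sentiment": 5},
    {"tweet": "Fell apart after one wash", "sentiment": 1},
]
csv_text = rows_to_csv(rows, ["tweet", "sentiment"])
print(csv_text)

# To save it to disk instead of printing:
# with open("tweets.csv", "w", newline="", encoding="utf-8") as f:
#     f.write(csv_text)
```

Using the `csv` module rather than joining strings by hand matters here because tweets often contain commas and quotation marks.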

Coda: Data Analysis

What do you do with the data once you have it?

Also use the code interpreter!

ChatGPT can use Python, and Claude can use JavaScript. But you don’t need to know Python or JavaScript: it will write and run the code for you.
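As a rough idea of what a first pass at analysis looks like, here is a minimal sketch that summarizes sentiment scores using only the standard library. The rows are made up, and the code interpreter will usually reach for other tools such as pandas.

```python
from collections import Counter
from statistics import mean


def summarize(rows):
    """Count, average, and tabulate the sentiment scores."""
    scores = [row["sentiment"] for row in rows]
    return {
        "n": len(scores),
        "mean": mean(scores),
        "distribution": dict(sorted(Counter(scores).items())),
    }


rows = [
    {"tweet": "Fell apart after one wash", "sentiment": 1},
    {"tweet": "Love the new jacket!", "sentiment": 5},
    {"tweet": "It's fine.", "sentiment": 3},
    {"tweet": "Arrived a day early!", "sentiment": 5},
]
summary = summarize(rows)
print(summary)
```

Once the labels sit in a structured form like this, the analysis itself is ordinary descriptive statistics.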

Summary

- Classification and Information Extraction are powerful tools for working with unstructured data

- Prompting with GenAI models, like ChatGPT or Claude - Just ask!

- Provide examples, specify your output, and clarify your schema

- For more trustworthy results:

- Use o1-preview

- Use temperature=0

- Use structured outputs

- Fine-tune your own models

- Use

Questions?

Lab: Classification Prompt Battle

You’ll be writing prompts to ask a larger language model to score data against a dataset.

Lab Details: https://ai.porg.dev/labs/classification

Use the following page for classification: